I’ve been wanting to experiment with adding Large Language Model (LLM) features to my current hobby project, the task-management app Dust.

In this post I’m focusing on a specific piece of the puzzle: deploying an HTTP server with LLM completion and embedding support to Fly.io.

(Want to skip the words? Thinking “just show me the code”? The complete recipe can be found on GitHub: https://github.com/luketurner/dust/tree/main/ai-server.)

This post assumes some preexisting knowledge of LLMs. If this is your first time hearing about them, you might want to check out some posts from Simon Willison:

- Catching up on the weird world of LLMs

- Embeddings: What they are and why they matter

- llamafile is the new best way to run a LLM on your own computer

Also, Andrej Karpathy’s YouTube channel has some great in-depth videos that explain how LLMs (and transformers and neural networks in general) work.

Okay. So you know what an LLM is. Let’s move on!

Why not OpenAI?

The simplest way to integrate an LLM into Dust would probably be to let users paste in their OpenAI API keys and use that to call the OpenAI chat completion and embedding APIs.

But this is a hobby project! I’m not trying to find the simplest approach. I want something self-managed, and ideally hosted on the same Fly.io platform that Dust itself is running on, so I can grapple with all the weird complexity of running LLMs in the cloud.

The goal is to have a “private service” (no public IP) that my other Fly apps can call via an API to do completions or embeddings, and that can continue working indefinitely with no 3rd party API requirements.

There’s also a practical reason to avoid building an app that uses the OpenAI API: it’s a moving target.

They’re regularly adding and removing models, and if, for example, I had written my app last year using the non-chat completions API, there’s a good chance the model would have been turned off.

It’s great that OpenAI is iterating on their models, but their API does not feel like a robust target for an application backend (yet). Do I want to recalculate everyone’s embeddings whenever OpenAI decides to deprecate the model I was using? Not really.

What’s a Llamafile?

A Llamafile is a single-file solution for running an LLM with llama.cpp. The single file includes the model itself, plus everything you need to use it. Specifically:

- Model weights

- CLI

- HTTP API

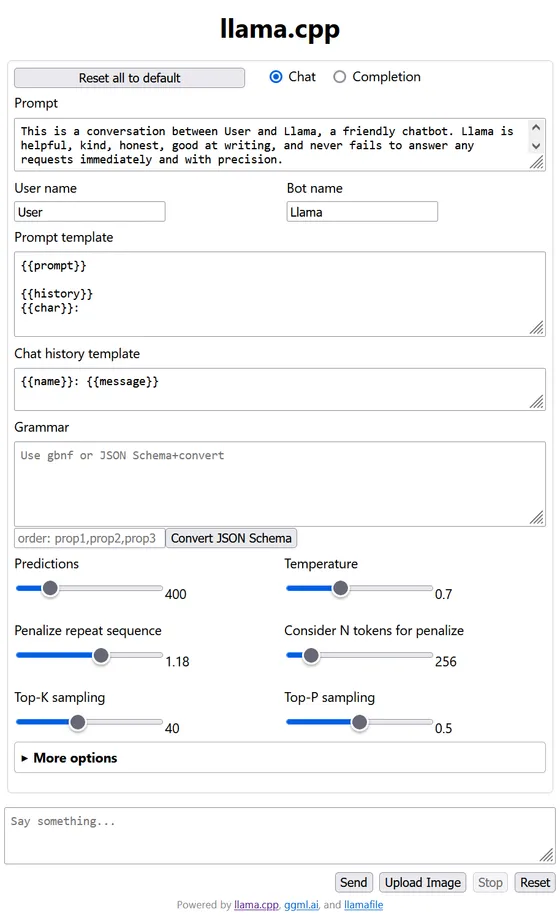

- Web UI (see screenshot at the top of post)

All in a single cross-platform executable file. (Llamafiles use Cosmopolitan Libc so the same binary can be run “almost” anywhere — Windows, Linux, OS X, etc.)

The reason I’m using Llamafiles for this project is that their single-binary, zero-dependency approach makes for easy cloud hosting.

Compare with some other (otherwise promising) options like vLLM, which require a full Python toolchain and even writing custom Python code to support embeddings, for example.

In short, Llamafiles seem like the easiest way to self-host an “LLM as a Service” (henceforth LLMaaS 😂) without writing custom server code or pulling in a complex dependency tree.

And the icing on the cake is it’s trivial to run the exact same model on my home (Windows) PC for local experimentation.

Considerations

Since we need a slightly large VM that could become expensive for a hobby project, we definitely don’t want our LLMaaS to be “highly available” (2+ machines).

In fact, to save money, the service should completely scale to zero when not in use.

Furthermore, because the model files can be multiple gigabytes, we don’t really want to embed them in the Docker image itself. (See Fly docs for some rationale here.)

Instead, we’ll create and provision a Fly volume to store the Llamafile. We’ll use a little helper script (called run.sh in this post) to download the file at runtime, which will only need to happen once.

Also, we don’t want our LLMaaS to be available to the public Internet. It’s a “private service” — the API can be called by our other Fly apps, and that’s it.

This is important because of the scale-to-zero functionality. If we expose this to the Internet and the service starts getting spurious requests, that basically costs us extra money.

Finally, for the Dust use-case it’s important to support both embedding and completion APIs. Embeddings will be used to assess similarity between tasks. Completion will be used to “intelligently” pick tasks to work on for the day.
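For the similarity use case, comparing two embeddings usually comes down to cosine similarity. Here is a minimal sketch in shell and awk. It is not Dust's actual implementation (that code isn't shown in this post): embedding_values and cosine_similarity are hypothetical helper names, and the parsing assumes the server's response looks like {"embedding": [...]} with a single flat array of numbers.

```shell
#!/bin/sh
# Hypothetical helpers for comparing two embeddings; not part of the Dust repo.

# Pull the numbers out of a response shaped like {"embedding": [0.1, -0.2, ...]}
# and print them space-separated. Assumes a single flat array (an assumption
# about the response shape, so check it against your server's output).
embedding_values() {
  printf '%s' "$1" | tr -d '\n' | sed -e 's/^[^[]*\[//' -e 's/\].*$//' | tr ',' ' '
}

# cosine_similarity "<vector a>" "<vector b>"
# Each vector is a string of space-separated numbers of the same length.
cosine_similarity() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    n = split(a, x, " "); m = split(b, y, " ")
    if (n != m || n == 0) {
      print "error: vectors must have the same non-zero length" > "/dev/stderr"
      exit 1
    }
    for (i = 1; i <= n; i++) {
      dot += x[i] * y[i]; na += x[i] * x[i]; nb += y[i] * y[i]
    }
    if (na == 0 || nb == 0) {
      print "error: zero vector" > "/dev/stderr"
      exit 1
    }
    printf "%.4f\n", dot / (sqrt(na) * sqrt(nb))
  }'
}

# Toy three-dimensional example; real embeddings have far more dimensions.
a=$(embedding_values '{"embedding": [1, 0, 1]}')
b=$(embedding_values '{"embedding": [1, 1, 0]}')
cosine_similarity "$a" "$b"   # prints 0.5000
```

Values close to 1 mean the two texts point in nearly the same direction in embedding space, and values near 0 mean they are unrelated. For more than a handful of tasks you would want to do this in the app's own language or a vector index rather than in shell.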

LLMaaS: The recipe

We can deploy an LLMaaS to Fly with only three files:

- A fly.toml.

- A Dockerfile.

- A run.sh helper script.

fly.toml

If you’ve used Fly before, you know about the fly.toml. This is a config file that tells Fly about the app you’re launching.

Our fly.toml looks like this (make sure to update app and primary_region to the values for your own app):

```toml
app = "FIXME"
primary_region = "FIXME"

# Mount the "model_data" volume at /data so the model lives outside the image.
[mounts]
  source = "model_data"
  destination = "/data"

[build]

[http_service]
  internal_port = 8080
  auto_stop_machines = true
  auto_start_machines = true
  min_machines_running = 0

[[vm]]
  cpu_kind = "shared"
  cpus = 1
  memory_mb = 1024
```

A couple callouts:

- We did not set force_https = true for the HTTP service. Automatic HTTPS doesn’t work for services that are accessed via the Fly private network.

- We specified a volume mount that we can use to store the model data outside of the Docker image.

You may also want to adjust the VM size depending on the model you want to use. One shared CPU with 1 GB memory is really the absolute bare minimum, useful only for demo/experimentation purposes.

Actually, Fly recently came out with the ability to get VMs with GPUs. If you want to run a flagship model, you might want to use that.

GPUs are expensive, though — minimum $2.50/hr. In comparison, the VM spun up by the above fly.toml would cost $5.70 per month.
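To put those two prices side by side, here is some back-of-the-envelope arithmetic. It uses only the two figures quoted above and assumes a 730-hour month. It ignores volume storage and any savings from scale-to-zero, so treat the output as a rough comparison rather than a bill estimate.

```shell
#!/bin/sh
# Back-of-the-envelope comparison using the two prices quoted in this post.
GPU_PER_HOUR=2.50        # minimum GPU price, USD per hour
SMALL_VM_PER_MONTH=5.70  # 1 shared CPU with 1 GB memory, USD per month
HOURS_PER_MONTH=730      # assumption: average month length in hours

# Cost of keeping a GPU machine running for the given number of hours.
gpu_cost() {
  awk -v rate="$GPU_PER_HOUR" -v hours="$1" 'BEGIN { printf "%.2f\n", rate * hours }'
}

# Hours of GPU time that cost the same as one month of the small VM.
break_even_hours() {
  awk -v rate="$GPU_PER_HOUR" -v vm="$SMALL_VM_PER_MONTH" 'BEGIN { printf "%.2f\n", vm / rate }'
}

echo "GPU, always on: \$$(gpu_cost "$HOURS_PER_MONTH") per month"
echo "Break-even: $(break_even_hours) GPU hours per month"
```

In other words, a little over two hours of GPU time costs about as much as a full month of the small VM, which is why scaling to zero matters even more if you go the GPU route.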

Dockerfile

Since Llamafiles don’t have any special dependencies, the Dockerfile just needs to install wget (a dependency for our run.sh script):

```dockerfile
FROM ubuntu:22.04

# wget (plus CA certificates for HTTPS) is the only thing run.sh needs.
RUN apt-get update -qq && \
    apt-get install --no-install-recommends -y ca-certificates wget && \
    rm -rf /var/lib/apt/lists /var/cache/apt/archives

WORKDIR /app
COPY ./run.sh ./run.sh

ENTRYPOINT ["/bin/sh", "-c", "./run.sh"]
```

run.sh

Then we put this in our run.sh:

```shell
#!/usr/bin/env bash
set -euo pipefail

LLAMAFILE_URL="https://huggingface.co/jartine/phi-2-llamafile/resolve/main/phi-2.Q2_K.llamafile"
MOUNT_DIR="/data"
LLAMAFILE="$MOUNT_DIR/llamafile"

if [ -e "$LLAMAFILE" ]; then
  echo "Found existing Llamafile at $LLAMAFILE. Download skipped."
else
  echo "Downloading $LLAMAFILE_URL..."
  # Download to a temporary name first so an interrupted download
  # is never mistaken for a complete Llamafile on the next boot.
  wget -qO "$LLAMAFILE.tmp" "$LLAMAFILE_URL"
  chmod 700 "$LLAMAFILE.tmp"
  mv "$LLAMAFILE.tmp" "$LLAMAFILE"
  echo "Done."
fi

# Start the server on the Fly private network with the embedding API enabled.
"$LLAMAFILE" --server --host "fly-local-6pn" --embedding --nobrowser
```

Adjust the LLAMAFILE_URL variable to point to whatever Llamafile you want to use. The example here is using a small (1.1GB) model so it’s fast to download and can run in a fairly small VM, but the results are not as good as those from a flagship model.

Also, make sure the MOUNT_DIR variable matches the mount destination in your fly.toml.

This script has two responsibilities:

- Download the Llamafile onto the mounted volume if it doesn’t already exist.

- Launch the Llamafile server.

The second of these happens in this line:

```shell
"$LLAMAFILE" --server --host "fly-local-6pn" --embedding --nobrowser
```

Feel free to tweak the Llamafile options here as needed. We’ve specified:

- --server: Launch the server interface (not the CLI).

- --host "fly-local-6pn": Tells the server to listen on the Fly IPv6 private network only. The default value here is 127.0.0.1 (local-only). If you wanted to expose this publicly with an IPv4 address, use 0.0.0.0 instead of fly-local-6pn.

- --embedding: Enables the embedding API.

- --nobrowser: Prevents the Llamafile from trying to launch a browser window after startup. Note this does not disable the Web UI itself, just the automatic browser launch.

You can find a list of other supported options here.
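Before deploying, it can be worth double-checking that MOUNT_DIR in run.sh really does match the mount destination in fly.toml, since a mismatch means the model is downloaded somewhere other than the volume. The helper below is a small sketch of that check. check_mount_dir is a made-up name, and the sed patterns assume both values are written as double-quoted strings on their own lines, as in the files above.

```shell
#!/bin/sh
# Hypothetical sanity check, not part of the original recipe.
# Usage: check_mount_dir fly.toml run.sh
check_mount_dir() {
  # First destination = "..." line in the fly.toml
  toml_dest=$(sed -n 's/^[[:space:]]*destination[[:space:]]*=[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1)
  # First MOUNT_DIR="..." assignment in the script
  script_dir=$(sed -n 's/^MOUNT_DIR="\([^"]*\)".*/\1/p' "$2" | head -n 1)

  if [ -n "$toml_dest" ] && [ "$toml_dest" = "$script_dir" ]; then
    echo "ok: both use $toml_dest"
  else
    echo "mismatch: fly.toml has '$toml_dest', run.sh has '$script_dir'" >&2
    return 1
  fi
}
```

Run it from the directory containing both files, for example check_mount_dir fly.toml run.sh. It returns a non-zero status on a mismatch, so it can also be chained in front of the deploy command.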

Deployment

Now that we have the three files we need, we’re ready to deploy our “LLMaaS”. Run the following, replacing the FIXME with the same region you put in the fly.toml:

```shell
flyctl launch --no-deploy
flyctl volume create model_data --region FIXME
flyctl deploy --ha=false --no-public-ips
```

Boom, it’s deployed!

But, because it has no public IPs, we can’t test our new LLM service with a *.fly.dev URL. This is by design, remember? It’s only available via the Fly private network.

But we can test it easily enough by using fly proxy to connect from our computer.

The only trick is that the proxy doesn’t trigger Fly’s load balancer autoscaling magic, so we need to manually start our machine before we can test it.

```shell
# temporarily scale up
flyctl machines start

# start the proxy
flyctl proxy 8081:8080
```

Then we can open http://localhost:8081 in our browser and see the llama.cpp Web UI!

Once we launch the proxy, we can also test the API directly, without using the Web UI.

For example, to experiment with the embedding API:

```shell
curl -X POST http://localhost:8081/embedding \
  --data '{"content": "Hello world"}'
```

See the Llamafile server README for API endpoint documentation.
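The completion API can be exercised the same way. The sketch below wraps both calls in small shell functions. It assumes the server exposes llama.cpp's POST /completion endpoint with prompt and n_predict fields (worth confirming in the server README for your Llamafile version). LLM_BASE_URL, json_string, completion_payload, embedding_payload, llm_complete, and llm_embed are all names made up for this example.

```shell
#!/bin/sh
# Hypothetical wrappers around the Llamafile server API.
# Defaults to the local proxy address used above.
LLM_BASE_URL="${LLM_BASE_URL:-http://localhost:8081}"

# Wrap a string in JSON quotes, escaping backslashes and double quotes.
# Assumes single-line input without control characters.
json_string() {
  printf '"%s"' "$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')"
}

# Build the request body for POST /completion (default: 64 tokens).
completion_payload() {
  printf '{"prompt": %s, "n_predict": %d}' "$(json_string "$1")" "${2:-64}"
}

# Build the request body for POST /embedding.
embedding_payload() {
  printf '{"content": %s}' "$(json_string "$1")"
}

# Send the requests; each prints the raw JSON response.
llm_complete() {
  curl -sS -X POST "$LLM_BASE_URL/completion" \
    -H "Content-Type: application/json" \
    --data "$(completion_payload "$1" "${2:-64}")"
}

llm_embed() {
  curl -sS -X POST "$LLM_BASE_URL/embedding" \
    -H "Content-Type: application/json" \
    --data "$(embedding_payload "$1")"
}

# Example (needs the proxy from above to be running):
#   llm_complete 'Three tasks to do today:' 32
#   llm_embed 'Water the plants'
```

Keeping the payload builders separate from the curl calls makes it easy to inspect exactly what gets sent, and pointing LLM_BASE_URL at a different address is all it takes to reuse the same functions elsewhere.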

After ~5-10 minutes the machine will scale back down automatically.

And there we have it. An LLM as a Service, running in our private network in the cloud.

I’ve really only scratched the surface of the complexity here, and I’m still in the process of learning a lot myself, so I’ll draw the line for this post here.

But really, this is just the first step in the process of integrating useful AI features into an app.

For example, I’m currently experimenting with finding a good combination of LLM model, “options”, and prompts to produce results that Dust can actually consume. That might be the subject of a future post.